SRC/route_timing.c File Reference

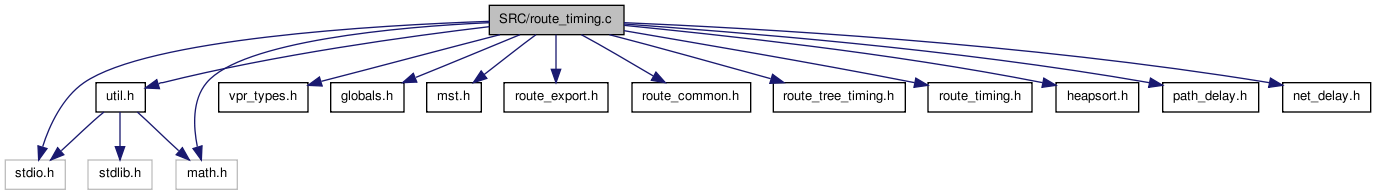

#include <stdio.h>#include <math.h>#include "util.h"#include "vpr_types.h"#include "globals.h"#include "mst.h"#include "route_export.h"#include "route_common.h"#include "route_tree_timing.h"#include "route_timing.h"#include "heapsort.h"#include "path_delay.h"#include "net_delay.h"

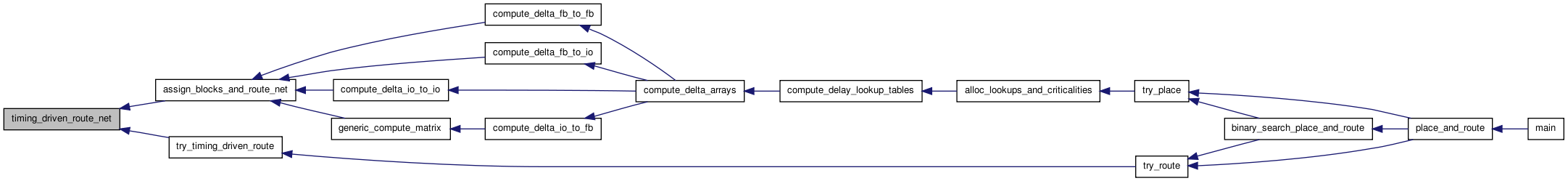

Include dependency graph for route_timing.c:

Go to the source code of this file.

Defines | |

| #define | ROUND_UP(x) (ceil (x - 0.001)) |

| #define | ERROR_TOL 0.0001 |

Functions | |

| static int | get_max_pins_per_net (void) |

| static void | add_route_tree_to_heap (t_rt_node *rt_node, int target_node, float target_criticality, float astar_fac) |

| static void | timing_driven_expand_neighbours (struct s_heap *current, int inet, float bend_cost, float criticality_fac, int target_node, float astar_fac) |

| static float | get_timing_driven_expected_cost (int inode, int target_node, float criticality_fac, float R_upstream) |

| static int | get_expected_segs_to_target (int inode, int target_node, int *num_segs_ortho_dir_ptr) |

| static void | update_rr_base_costs (int inet, float largest_criticality) |

| static void | timing_driven_check_net_delays (float **net_delay) |

| boolean | try_timing_driven_route (struct s_router_opts router_opts, float **net_slack, float **net_delay, t_ivec **fb_opins_used_locally) |

| void | alloc_timing_driven_route_structs (float **pin_criticality_ptr, int **sink_order_ptr, t_rt_node ***rt_node_of_sink_ptr) |

| void | free_timing_driven_route_structs (float *pin_criticality, int *sink_order, t_rt_node **rt_node_of_sink) |

| boolean | timing_driven_route_net (int inet, float pres_fac, float max_criticality, float criticality_exp, float astar_fac, float bend_cost, float *net_slack, float *pin_criticality, int *sink_order, t_rt_node **rt_node_of_sink, float T_crit, float *net_delay) |

Define Documentation

| #define ERROR_TOL 0.0001 |

Definition at line 766 of file route_timing.c.

| #define ROUND_UP | ( | x | ) | (ceil (x - 0.001)) |

Definition at line 612 of file route_timing.c.

Function Documentation

| static void add_route_tree_to_heap | ( | t_rt_node * | rt_node, | |

| int | target_node, | |||

| float | target_criticality, | |||

| float | astar_fac | |||

| ) | [static] |

Definition at line 408 of file route_timing.c.

00412 { 00413 00414 /* Puts the entire partial routing below and including rt_node onto the heap * 00415 * (except for those parts marked as not to be expanded) by calling itself * 00416 * recursively. */ 00417 00418 int inode; 00419 t_rt_node *child_node; 00420 t_linked_rt_edge *linked_rt_edge; 00421 float tot_cost, backward_path_cost, R_upstream; 00422 00423 /* Pre-order depth-first traversal */ 00424 00425 if(rt_node->re_expand) 00426 { 00427 inode = rt_node->inode; 00428 backward_path_cost = target_criticality * rt_node->Tdel; 00429 R_upstream = rt_node->R_upstream; 00430 tot_cost = 00431 backward_path_cost + 00432 astar_fac * get_timing_driven_expected_cost(inode, 00433 target_node, 00434 target_criticality, 00435 R_upstream); 00436 node_to_heap(inode, tot_cost, NO_PREVIOUS, NO_PREVIOUS, 00437 backward_path_cost, R_upstream); 00438 } 00439 00440 linked_rt_edge = rt_node->u.child_list; 00441 00442 while(linked_rt_edge != NULL) 00443 { 00444 child_node = linked_rt_edge->child; 00445 add_route_tree_to_heap(child_node, target_node, 00446 target_criticality, astar_fac); 00447 linked_rt_edge = linked_rt_edge->next; 00448 } 00449 }

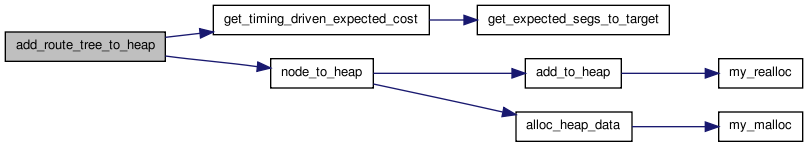

Here is the call graph for this function:

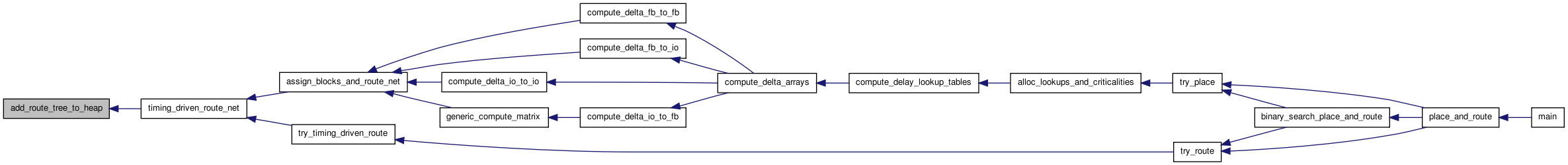

Here is the caller graph for this function:

| void alloc_timing_driven_route_structs | ( | float ** | pin_criticality_ptr, | |

| int ** | sink_order_ptr, | |||

| t_rt_node *** | rt_node_of_sink_ptr | |||

| ) |

Definition at line 186 of file route_timing.c.

00189 { 00190 00191 /* Allocates all the structures needed only by the timing-driven router. */ 00192 00193 int max_pins_per_net; 00194 float *pin_criticality; 00195 int *sink_order; 00196 t_rt_node **rt_node_of_sink; 00197 00198 max_pins_per_net = get_max_pins_per_net(); 00199 00200 pin_criticality = 00201 (float *)my_malloc((max_pins_per_net - 1) * sizeof(float)); 00202 *pin_criticality_ptr = pin_criticality - 1; /* First sink is pin #1. */ 00203 00204 sink_order = (int *)my_malloc((max_pins_per_net - 1) * sizeof(int)); 00205 *sink_order_ptr = sink_order - 1; 00206 00207 rt_node_of_sink = (t_rt_node **) my_malloc((max_pins_per_net - 1) * 00208 sizeof(t_rt_node *)); 00209 *rt_node_of_sink_ptr = rt_node_of_sink - 1; 00210 00211 alloc_route_tree_timing_structs(); 00212 }

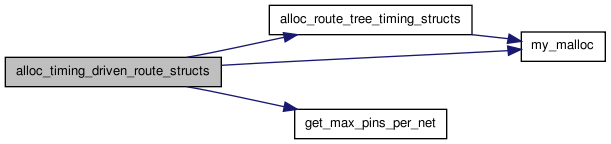

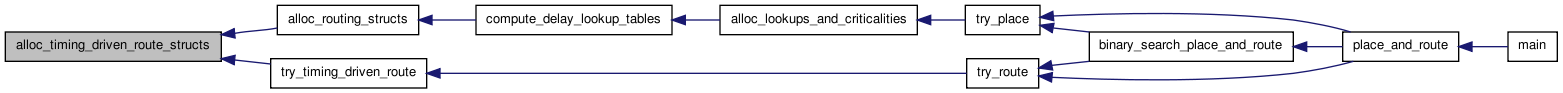

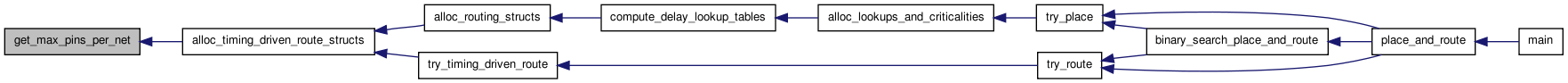

Here is the call graph for this function:

Here is the caller graph for this function:

| void free_timing_driven_route_structs | ( | float * | pin_criticality, | |

| int * | sink_order, | |||

| t_rt_node ** | rt_node_of_sink | |||

| ) |

Definition at line 216 of file route_timing.c.

00219 { 00220 00221 /* Frees all the stuctures needed only by the timing-driven router. */ 00222 00223 free(pin_criticality + 1); /* Starts at index 1. */ 00224 free(sink_order + 1); 00225 free(rt_node_of_sink + 1); 00226 free_route_tree_timing_structs(); 00227 }

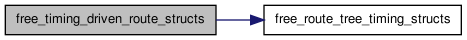

Here is the call graph for this function:

Here is the caller graph for this function:

| static int get_expected_segs_to_target | ( | int | inode, | |

| int | target_node, | |||

| int * | num_segs_ortho_dir_ptr | |||

| ) | [static] |

Definition at line 616 of file route_timing.c.

00619 { 00620 00621 /* Returns the number of segments the same type as inode that will be needed * 00622 * to reach target_node (not including inode) in each direction (the same * 00623 * direction (horizontal or vertical) as inode and the orthogonal direction).*/ 00624 00625 t_rr_type rr_type; 00626 int target_x, target_y, num_segs_same_dir, cost_index, ortho_cost_index; 00627 int no_need_to_pass_by_clb; 00628 float inv_length, ortho_inv_length, ylow, yhigh, xlow, xhigh; 00629 00630 target_x = rr_node[target_node].xlow; 00631 target_y = rr_node[target_node].ylow; 00632 cost_index = rr_node[inode].cost_index; 00633 inv_length = rr_indexed_data[cost_index].inv_length; 00634 ortho_cost_index = rr_indexed_data[cost_index].ortho_cost_index; 00635 ortho_inv_length = rr_indexed_data[ortho_cost_index].inv_length; 00636 rr_type = rr_node[inode].type; 00637 00638 if(rr_type == CHANX) 00639 { 00640 ylow = rr_node[inode].ylow; 00641 xhigh = rr_node[inode].xhigh; 00642 xlow = rr_node[inode].xlow; 00643 00644 /* Count vertical (orthogonal to inode) segs first. */ 00645 00646 if(ylow > target_y) 00647 { /* Coming from a row above target? */ 00648 *num_segs_ortho_dir_ptr = 00649 ROUND_UP((ylow - target_y + 1.) * ortho_inv_length); 00650 no_need_to_pass_by_clb = 1; 00651 } 00652 else if(ylow < target_y - 1) 00653 { /* Below the FB bottom? */ 00654 *num_segs_ortho_dir_ptr = ROUND_UP((target_y - ylow) * 00655 ortho_inv_length); 00656 no_need_to_pass_by_clb = 1; 00657 } 00658 else 00659 { /* In a row that passes by target FB */ 00660 *num_segs_ortho_dir_ptr = 0; 00661 no_need_to_pass_by_clb = 0; 00662 } 00663 00664 /* Now count horizontal (same dir. as inode) segs. */ 00665 00666 if(xlow > target_x + no_need_to_pass_by_clb) 00667 { 00668 num_segs_same_dir = 00669 ROUND_UP((xlow - no_need_to_pass_by_clb - 00670 target_x) * inv_length); 00671 } 00672 else if(xhigh < target_x - no_need_to_pass_by_clb) 00673 { 00674 num_segs_same_dir = 00675 ROUND_UP((target_x - no_need_to_pass_by_clb - 00676 xhigh) * inv_length); 00677 } 00678 else 00679 { 00680 num_segs_same_dir = 0; 00681 } 00682 } 00683 00684 else 00685 { /* inode is a CHANY */ 00686 ylow = rr_node[inode].ylow; 00687 yhigh = rr_node[inode].yhigh; 00688 xlow = rr_node[inode].xlow; 00689 00690 /* Count horizontal (orthogonal to inode) segs first. */ 00691 00692 if(xlow > target_x) 00693 { /* Coming from a column right of target? */ 00694 *num_segs_ortho_dir_ptr = 00695 ROUND_UP((xlow - target_x + 1.) * ortho_inv_length); 00696 no_need_to_pass_by_clb = 1; 00697 } 00698 else if(xlow < target_x - 1) 00699 { /* Left of and not adjacent to the FB? */ 00700 *num_segs_ortho_dir_ptr = ROUND_UP((target_x - xlow) * 00701 ortho_inv_length); 00702 no_need_to_pass_by_clb = 1; 00703 } 00704 else 00705 { /* In a column that passes by target FB */ 00706 *num_segs_ortho_dir_ptr = 0; 00707 no_need_to_pass_by_clb = 0; 00708 } 00709 00710 /* Now count vertical (same dir. as inode) segs. */ 00711 00712 if(ylow > target_y + no_need_to_pass_by_clb) 00713 { 00714 num_segs_same_dir = 00715 ROUND_UP((ylow - no_need_to_pass_by_clb - 00716 target_y) * inv_length); 00717 } 00718 else if(yhigh < target_y - no_need_to_pass_by_clb) 00719 { 00720 num_segs_same_dir = 00721 ROUND_UP((target_y - no_need_to_pass_by_clb - 00722 yhigh) * inv_length); 00723 } 00724 else 00725 { 00726 num_segs_same_dir = 0; 00727 } 00728 } 00729 00730 return (num_segs_same_dir); 00731 }

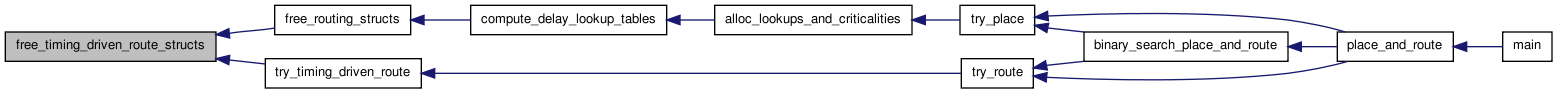

Here is the caller graph for this function:

| static int get_max_pins_per_net | ( | void | ) | [static] |

Definition at line 231 of file route_timing.c.

00232 { 00233 00234 /* Returns the largest number of pins on any non-global net. */ 00235 00236 int inet, max_pins_per_net; 00237 00238 max_pins_per_net = 0; 00239 for(inet = 0; inet < num_nets; inet++) 00240 { 00241 if(net[inet].is_global == FALSE) 00242 { 00243 max_pins_per_net = 00244 max(max_pins_per_net, (net[inet].num_sinks + 1)); 00245 } 00246 } 00247 00248 return (max_pins_per_net); 00249 }

Here is the caller graph for this function:

| static float get_timing_driven_expected_cost | ( | int | inode, | |

| int | target_node, | |||

| float | criticality_fac, | |||

| float | R_upstream | |||

| ) | [static] |

Definition at line 546 of file route_timing.c.

00550 { 00551 00552 /* Determines the expected cost (due to both delay and resouce cost) to reach * 00553 * the target node from inode. It doesn't include the cost of inode -- * 00554 * that's already in the "known" path_cost. */ 00555 00556 t_rr_type rr_type; 00557 int cost_index, ortho_cost_index, num_segs_same_dir, num_segs_ortho_dir; 00558 float expected_cost, cong_cost, Tdel; 00559 00560 rr_type = rr_node[inode].type; 00561 00562 if(rr_type == CHANX || rr_type == CHANY) 00563 { 00564 num_segs_same_dir = 00565 get_expected_segs_to_target(inode, target_node, 00566 &num_segs_ortho_dir); 00567 cost_index = rr_node[inode].cost_index; 00568 ortho_cost_index = rr_indexed_data[cost_index].ortho_cost_index; 00569 00570 cong_cost = 00571 num_segs_same_dir * rr_indexed_data[cost_index].base_cost + 00572 num_segs_ortho_dir * 00573 rr_indexed_data[ortho_cost_index].base_cost; 00574 cong_cost += 00575 rr_indexed_data[IPIN_COST_INDEX].base_cost + 00576 rr_indexed_data[SINK_COST_INDEX].base_cost; 00577 00578 Tdel = num_segs_same_dir * rr_indexed_data[cost_index].T_linear + 00579 num_segs_ortho_dir * 00580 rr_indexed_data[ortho_cost_index].T_linear + 00581 num_segs_same_dir * num_segs_same_dir * 00582 rr_indexed_data[cost_index].T_quadratic + 00583 num_segs_ortho_dir * num_segs_ortho_dir * 00584 rr_indexed_data[ortho_cost_index].T_quadratic + 00585 R_upstream * (num_segs_same_dir * 00586 rr_indexed_data[cost_index].C_load + 00587 num_segs_ortho_dir * 00588 rr_indexed_data[ortho_cost_index].C_load); 00589 00590 Tdel += rr_indexed_data[IPIN_COST_INDEX].T_linear; 00591 00592 expected_cost = 00593 criticality_fac * Tdel + (1. - criticality_fac) * cong_cost; 00594 return (expected_cost); 00595 } 00596 00597 else if(rr_type == IPIN) 00598 { /* Change if you're allowing route-throughs */ 00599 return (rr_indexed_data[SINK_COST_INDEX].base_cost); 00600 } 00601 00602 else 00603 { /* Change this if you want to investigate route-throughs */ 00604 return (0.); 00605 } 00606 }

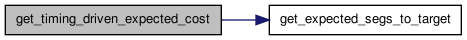

Here is the call graph for this function:

Here is the caller graph for this function:

| static void timing_driven_check_net_delays | ( | float ** | net_delay | ) | [static] |

Definition at line 769 of file route_timing.c.

00770 { 00771 00772 /* Checks that the net delays computed incrementally during timing driven * 00773 * routing match those computed from scratch by the net_delay.c module. */ 00774 00775 int inet, ipin; 00776 float **net_delay_check; 00777 struct s_linked_vptr *ch_list_head_net_delay_check; 00778 00779 net_delay_check = alloc_net_delay(&ch_list_head_net_delay_check); 00780 load_net_delay_from_routing(net_delay_check); 00781 00782 for(inet = 0; inet < num_nets; inet++) 00783 { 00784 for(ipin = 1; ipin <= net[inet].num_sinks; ipin++) 00785 { 00786 if(net_delay_check[inet][ipin] == 0.) 00787 { /* Should be only GLOBAL nets */ 00788 if(net_delay[inet][ipin] != 0.) 00789 { 00790 printf 00791 ("Error in timing_driven_check_net_delays: net %d pin %d." 00792 "\tIncremental calc. net_delay is %g, but from scratch " 00793 "net delay is %g.\n", inet, ipin, 00794 net_delay[inet][ipin], 00795 net_delay_check[inet][ipin]); 00796 exit(1); 00797 } 00798 } 00799 else 00800 { 00801 if(fabs 00802 (1. - 00803 net_delay[inet][ipin] / 00804 net_delay_check[inet][ipin]) > ERROR_TOL) 00805 { 00806 printf 00807 ("Error in timing_driven_check_net_delays: net %d pin %d." 00808 "\tIncremental calc. net_delay is %g, but from scratch " 00809 "net delay is %g.\n", inet, ipin, 00810 net_delay[inet][ipin], 00811 net_delay_check[inet][ipin]); 00812 exit(1); 00813 } 00814 } 00815 } 00816 } 00817 00818 free_net_delay(net_delay_check, &ch_list_head_net_delay_check); 00819 printf("Completed net delay value cross check successfully.\n"); 00820 }

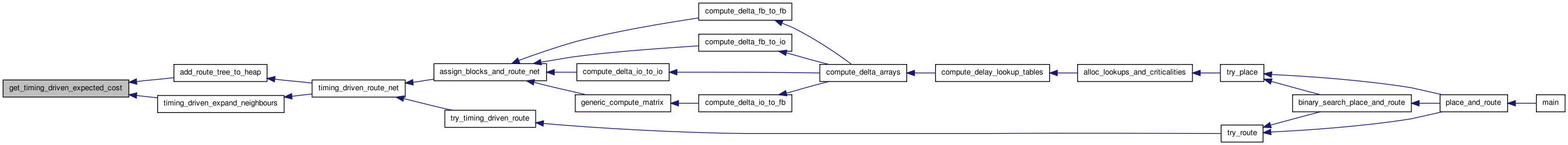

Here is the call graph for this function:

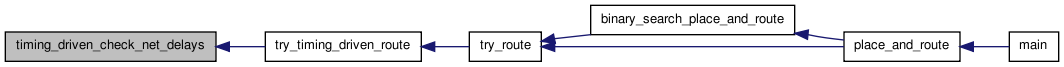

Here is the caller graph for this function:

| static void timing_driven_expand_neighbours | ( | struct s_heap * | current, | |

| int | inet, | |||

| float | bend_cost, | |||

| float | criticality_fac, | |||

| int | target_node, | |||

| float | astar_fac | |||

| ) | [static] |

Definition at line 453 of file route_timing.c.

00459 { 00460 00461 /* Puts all the rr_nodes adjacent to current on the heap. rr_nodes outside * 00462 * the expanded bounding box specified in route_bb are not added to the * 00463 * heap. */ 00464 00465 int iconn, to_node, num_edges, inode, iswitch, target_x, target_y; 00466 t_rr_type from_type, to_type; 00467 float new_tot_cost, old_back_pcost, new_back_pcost, R_upstream; 00468 float new_R_upstream, Tdel; 00469 00470 inode = current->index; 00471 old_back_pcost = current->backward_path_cost; 00472 R_upstream = current->R_upstream; 00473 num_edges = rr_node[inode].num_edges; 00474 00475 target_x = rr_node[target_node].xhigh; 00476 target_y = rr_node[target_node].yhigh; 00477 00478 for(iconn = 0; iconn < num_edges; iconn++) 00479 { 00480 to_node = rr_node[inode].edges[iconn]; 00481 00482 if(rr_node[to_node].xhigh < route_bb[inet].xmin || 00483 rr_node[to_node].xlow > route_bb[inet].xmax || 00484 rr_node[to_node].yhigh < route_bb[inet].ymin || 00485 rr_node[to_node].ylow > route_bb[inet].ymax) 00486 continue; /* Node is outside (expanded) bounding box. */ 00487 00488 /* Prune away IPINs that lead to blocks other than the target one. Avoids * 00489 * the issue of how to cost them properly so they don't get expanded before * 00490 * more promising routes, but makes route-throughs (via CLBs) impossible. * 00491 * Change this if you want to investigate route-throughs. */ 00492 00493 to_type = rr_node[to_node].type; 00494 if(to_type == IPIN && (rr_node[to_node].xhigh != target_x || 00495 rr_node[to_node].yhigh != target_y)) 00496 continue; 00497 00498 00499 /* new_back_pcost stores the "known" part of the cost to this node -- the * 00500 * congestion cost of all the routing resources back to the existing route * 00501 * plus the known delay of the total path back to the source. new_tot_cost * 00502 * is this "known" backward cost + an expected cost to get to the target. */ 00503 00504 new_back_pcost = old_back_pcost + (1. - criticality_fac) * 00505 get_rr_cong_cost(to_node); 00506 00507 iswitch = rr_node[inode].switches[iconn]; 00508 if(switch_inf[iswitch].buffered) 00509 { 00510 new_R_upstream = switch_inf[iswitch].R; 00511 } 00512 else 00513 { 00514 new_R_upstream = R_upstream + switch_inf[iswitch].R; 00515 } 00516 00517 Tdel = 00518 rr_node[to_node].C * (new_R_upstream + 00519 0.5 * rr_node[to_node].R); 00520 Tdel += switch_inf[iswitch].Tdel; 00521 new_R_upstream += rr_node[to_node].R; 00522 new_back_pcost += criticality_fac * Tdel; 00523 00524 if(bend_cost != 0.) 00525 { 00526 from_type = rr_node[inode].type; 00527 to_type = rr_node[to_node].type; 00528 if((from_type == CHANX && to_type == CHANY) || 00529 (from_type == CHANY && to_type == CHANX)) 00530 new_back_pcost += bend_cost; 00531 } 00532 00533 new_tot_cost = new_back_pcost + astar_fac * 00534 get_timing_driven_expected_cost(to_node, target_node, 00535 criticality_fac, 00536 new_R_upstream); 00537 00538 node_to_heap(to_node, new_tot_cost, inode, iconn, new_back_pcost, 00539 new_R_upstream); 00540 00541 } /* End for all neighbours */ 00542 }

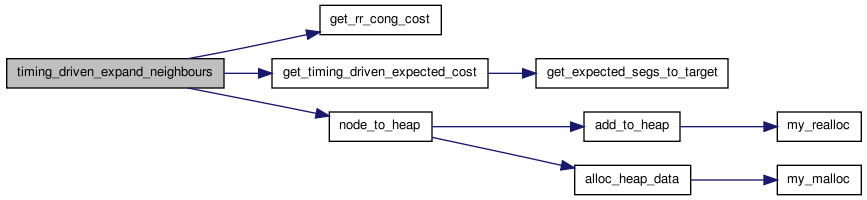

Here is the call graph for this function:

Here is the caller graph for this function:

| boolean timing_driven_route_net | ( | int | inet, | |

| float | pres_fac, | |||

| float | max_criticality, | |||

| float | criticality_exp, | |||

| float | astar_fac, | |||

| float | bend_cost, | |||

| float * | net_slack, | |||

| float * | pin_criticality, | |||

| int * | sink_order, | |||

| t_rt_node ** | rt_node_of_sink, | |||

| float | T_crit, | |||

| float * | net_delay | |||

| ) |

Definition at line 253 of file route_timing.c.

00265 { 00266 00267 /* Returns TRUE as long is found some way to hook up this net, even if that * 00268 * way resulted in overuse of resources (congestion). If there is no way * 00269 * to route this net, even ignoring congestion, it returns FALSE. In this * 00270 * case the rr_graph is disconnected and you can give up. */ 00271 00272 int ipin, num_sinks, itarget, target_pin, target_node, inode; 00273 float target_criticality, old_tcost, new_tcost, largest_criticality, 00274 pin_crit; 00275 float old_back_cost, new_back_cost; 00276 t_rt_node *rt_root; 00277 struct s_heap *current; 00278 struct s_trace *new_route_start_tptr; 00279 00280 /* Rip-up any old routing. */ 00281 00282 pathfinder_update_one_cost(trace_head[inet], -1, pres_fac); 00283 free_traceback(inet); 00284 00285 for(ipin = 1; ipin <= net[inet].num_sinks; ipin++) 00286 { /* For all sinks */ 00287 pin_crit = max(max_criticality - net_slack[ipin] / T_crit, 0.); 00288 pin_crit = pow(pin_crit, criticality_exp); 00289 pin_crit = min(pin_crit, max_criticality); 00290 pin_criticality[ipin] = pin_crit; 00291 } 00292 00293 num_sinks = net[inet].num_sinks; 00294 heapsort(sink_order, pin_criticality, num_sinks); 00295 00296 /* Update base costs according to fanout and criticality rules */ 00297 00298 largest_criticality = pin_criticality[sink_order[1]]; 00299 update_rr_base_costs(inet, largest_criticality); 00300 00301 mark_ends(inet); /* Only needed to check for multiply-connected SINKs */ 00302 00303 rt_root = init_route_tree_to_source(inet); 00304 00305 for(itarget = 1; itarget <= num_sinks; itarget++) 00306 { 00307 target_pin = sink_order[itarget]; 00308 target_node = net_rr_terminals[inet][target_pin]; 00309 00310 target_criticality = pin_criticality[target_pin]; 00311 00312 add_route_tree_to_heap(rt_root, target_node, target_criticality, 00313 astar_fac); 00314 00315 current = get_heap_head(); 00316 00317 if(current == NULL) 00318 { /* Infeasible routing. No possible path for net. */ 00319 reset_path_costs(); 00320 free_route_tree(rt_root); 00321 return (FALSE); 00322 } 00323 00324 inode = current->index; 00325 00326 while(inode != target_node) 00327 { 00328 old_tcost = rr_node_route_inf[inode].path_cost; 00329 new_tcost = current->cost; 00330 00331 if(old_tcost > 0.99 * HUGE_FLOAT) /* First time touched. */ 00332 old_back_cost = HUGE_FLOAT; 00333 else 00334 old_back_cost = 00335 rr_node_route_inf[inode].backward_path_cost; 00336 00337 new_back_cost = current->backward_path_cost; 00338 00339 /* I only re-expand a node if both the "known" backward cost is lower * 00340 * in the new expansion (this is necessary to prevent loops from * 00341 * forming in the routing and causing havoc) *and* the expected total * 00342 * cost to the sink is lower than the old value. Different R_upstream * 00343 * values could make a path with lower back_path_cost less desirable * 00344 * than one with higher cost. Test whether or not I should disallow * 00345 * re-expansion based on a higher total cost. */ 00346 00347 if(old_tcost > new_tcost && old_back_cost > new_back_cost) 00348 { 00349 rr_node_route_inf[inode].prev_node = 00350 current->u.prev_node; 00351 rr_node_route_inf[inode].prev_edge = 00352 current->prev_edge; 00353 rr_node_route_inf[inode].path_cost = new_tcost; 00354 rr_node_route_inf[inode].backward_path_cost = 00355 new_back_cost; 00356 00357 if(old_tcost > 0.99 * HUGE_FLOAT) /* First time touched. */ 00358 add_to_mod_list(&rr_node_route_inf[inode]. 00359 path_cost); 00360 00361 timing_driven_expand_neighbours(current, inet, 00362 bend_cost, 00363 target_criticality, 00364 target_node, 00365 astar_fac); 00366 } 00367 00368 free_heap_data(current); 00369 current = get_heap_head(); 00370 00371 if(current == NULL) 00372 { /* Impossible routing. No path for net. */ 00373 reset_path_costs(); 00374 free_route_tree(rt_root); 00375 return (FALSE); 00376 } 00377 00378 inode = current->index; 00379 } 00380 00381 /* NB: In the code below I keep two records of the partial routing: the * 00382 * traceback and the route_tree. The route_tree enables fast recomputation * 00383 * of the Elmore delay to each node in the partial routing. The traceback * 00384 * lets me reuse all the routines written for breadth-first routing, which * 00385 * all take a traceback structure as input. Before this routine exits the * 00386 * route_tree structure is destroyed; only the traceback is needed at that * 00387 * point. */ 00388 00389 rr_node_route_inf[inode].target_flag--; /* Connected to this SINK. */ 00390 new_route_start_tptr = update_traceback(current, inet); 00391 rt_node_of_sink[target_pin] = update_route_tree(current); 00392 free_heap_data(current); 00393 pathfinder_update_one_cost(new_route_start_tptr, 1, pres_fac); 00394 00395 empty_heap(); 00396 reset_path_costs(); 00397 } 00398 00399 /* For later timing analysis. */ 00400 00401 update_net_delays_from_route_tree(net_delay, rt_node_of_sink, inet); 00402 free_route_tree(rt_root); 00403 return (TRUE); 00404 }

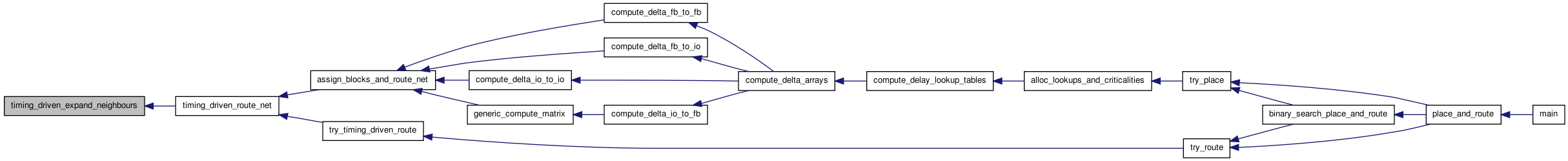

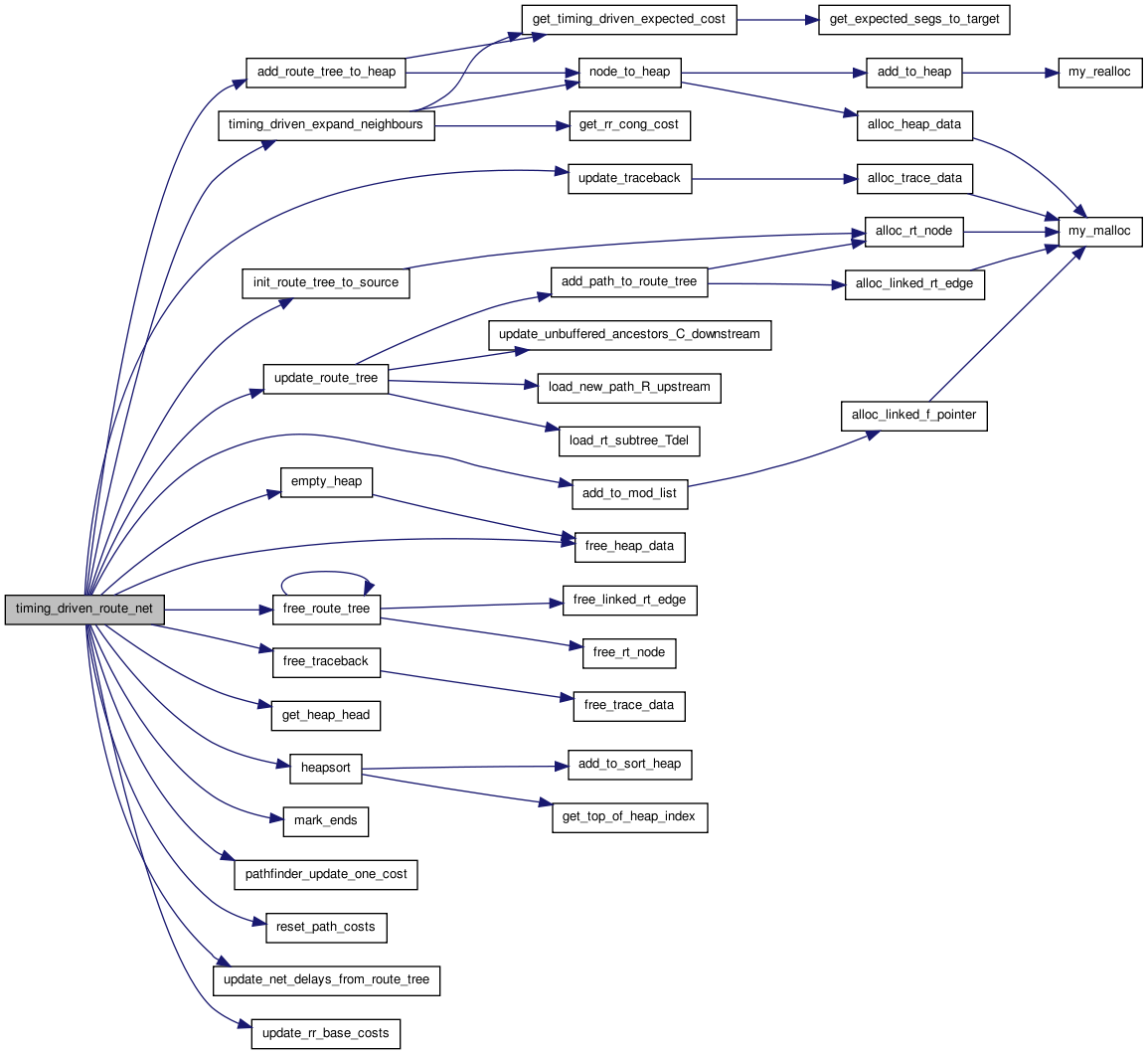

Here is the call graph for this function:

Here is the caller graph for this function:

| boolean try_timing_driven_route | ( | struct s_router_opts | router_opts, | |

| float ** | net_slack, | |||

| float ** | net_delay, | |||

| t_ivec ** | fb_opins_used_locally | |||

| ) |

Definition at line 50 of file route_timing.c.

00054 { 00055 00056 /* Timing-driven routing algorithm. The timing graph (includes net_slack) * 00057 * must have already been allocated, and net_delay must have been allocated. * 00058 * Returns TRUE if the routing succeeds, FALSE otherwise. */ 00059 00060 int itry, inet, ipin; 00061 boolean success, is_routable, rip_up_local_opins; 00062 float *pin_criticality; /* [1..max_pins_per_net-1]. */ 00063 int *sink_order; /* [1..max_pins_per_net-1]. */ 00064 t_rt_node **rt_node_of_sink; /* [1..max_pins_per_net-1]. */ 00065 float T_crit, pres_fac; 00066 00067 alloc_timing_driven_route_structs(&pin_criticality, &sink_order, 00068 &rt_node_of_sink); 00069 00070 /* First do one routing iteration ignoring congestion and marking all sinks * 00071 * on each net as critical to get reasonable net delay estimates. */ 00072 00073 for(inet = 0; inet < num_nets; inet++) 00074 { 00075 if(net[inet].is_global == FALSE) 00076 { 00077 for(ipin = 1; ipin <= net[inet].num_sinks; ipin++) 00078 net_slack[inet][ipin] = 0.; 00079 } 00080 else 00081 { /* Set delay of global signals to zero. */ 00082 for(ipin = 1; ipin <= net[inet].num_sinks; ipin++) 00083 net_delay[inet][ipin] = 0.; 00084 } 00085 } 00086 00087 T_crit = 1.; 00088 pres_fac = router_opts.first_iter_pres_fac; /* Typically 0 -> ignore cong. */ 00089 00090 for(itry = 1; itry <= router_opts.max_router_iterations; itry++) 00091 { 00092 00093 for(inet = 0; inet < num_nets; inet++) 00094 { 00095 if(net[inet].is_global == FALSE) 00096 { /* Skip global nets. */ 00097 00098 is_routable = 00099 timing_driven_route_net(inet, pres_fac, 00100 router_opts. 00101 max_criticality, 00102 router_opts. 00103 criticality_exp, 00104 router_opts.astar_fac, 00105 router_opts.bend_cost, 00106 net_slack[inet], 00107 pin_criticality, 00108 sink_order, 00109 rt_node_of_sink, 00110 T_crit, 00111 net_delay[inet]); 00112 00113 /* Impossible to route? (disconnected rr_graph) */ 00114 00115 if(!is_routable) 00116 { 00117 printf("Routing failed.\n"); 00118 free_timing_driven_route_structs 00119 (pin_criticality, sink_order, 00120 rt_node_of_sink); 00121 return (FALSE); 00122 } 00123 } 00124 } 00125 00126 /* Make sure any FB OPINs used up by subblocks being hooked directly * 00127 * to them are reserved for that purpose. */ 00128 00129 if(itry == 1) 00130 rip_up_local_opins = FALSE; 00131 else 00132 rip_up_local_opins = TRUE; 00133 00134 reserve_locally_used_opins(pres_fac, rip_up_local_opins, 00135 fb_opins_used_locally); 00136 00137 /* Pathfinder guys quit after finding a feasible route. I may want to keep * 00138 * going longer, trying to improve timing. Think about this some. */ 00139 00140 success = feasible_routing(); 00141 if(success) 00142 { 00143 printf 00144 ("Successfully routed after %d routing iterations.\n", 00145 itry); 00146 free_timing_driven_route_structs(pin_criticality, 00147 sink_order, 00148 rt_node_of_sink); 00149 #ifdef DEBUG 00150 timing_driven_check_net_delays(net_delay); 00151 #endif 00152 return (TRUE); 00153 } 00154 00155 if(itry == 1) 00156 { 00157 pres_fac = router_opts.initial_pres_fac; 00158 pathfinder_update_cost(pres_fac, 0.); /* Acc_fac=0 for first iter. */ 00159 } 00160 else 00161 { 00162 pres_fac *= router_opts.pres_fac_mult; 00163 00164 /* Avoid overflow for high iteration counts, even if acc_cost is big */ 00165 pres_fac = min (pres_fac, HUGE_FLOAT / 1e5); 00166 00167 pathfinder_update_cost(pres_fac, router_opts.acc_fac); 00168 } 00169 00170 /* Update slack values by doing another timing analysis. * 00171 * Timing_driven_route_net updated the net delay values. */ 00172 00173 load_timing_graph_net_delays(net_delay); 00174 T_crit = load_net_slack(net_slack, 0); 00175 printf("T_crit: %g.\n", T_crit); 00176 } 00177 00178 printf("Routing failed.\n"); 00179 free_timing_driven_route_structs(pin_criticality, sink_order, 00180 rt_node_of_sink); 00181 return (FALSE); 00182 }

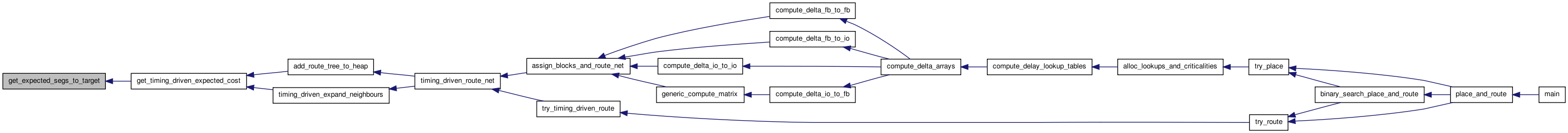

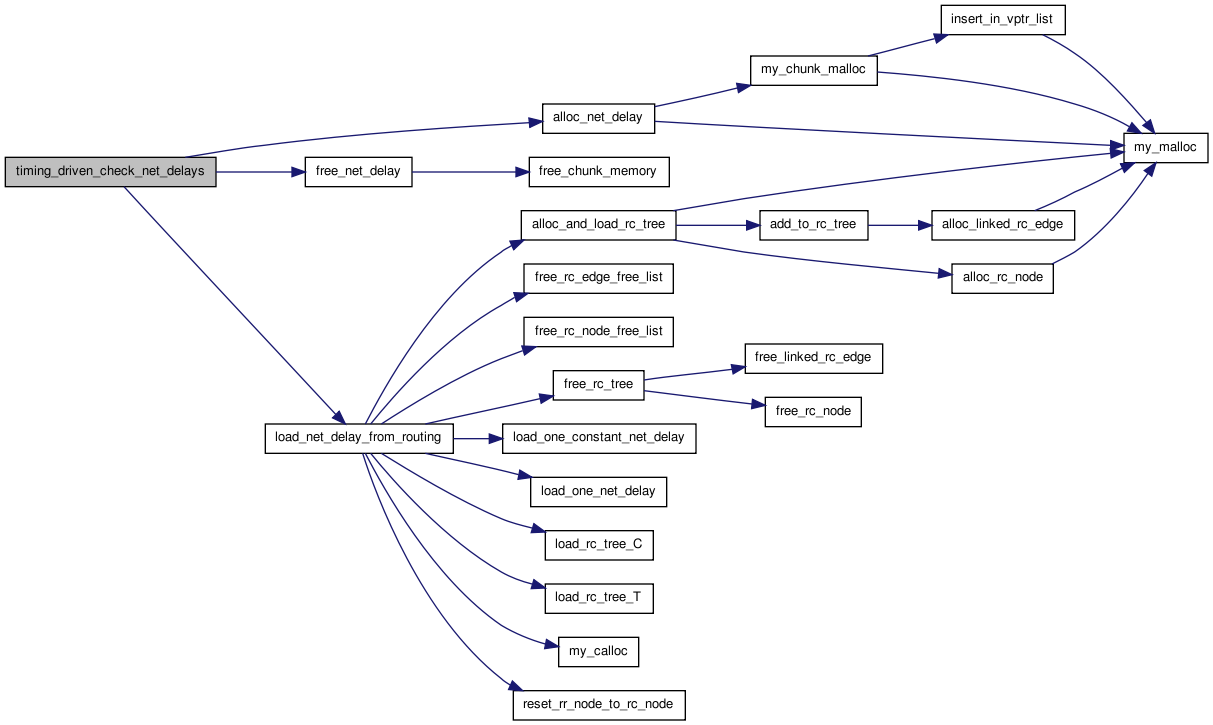

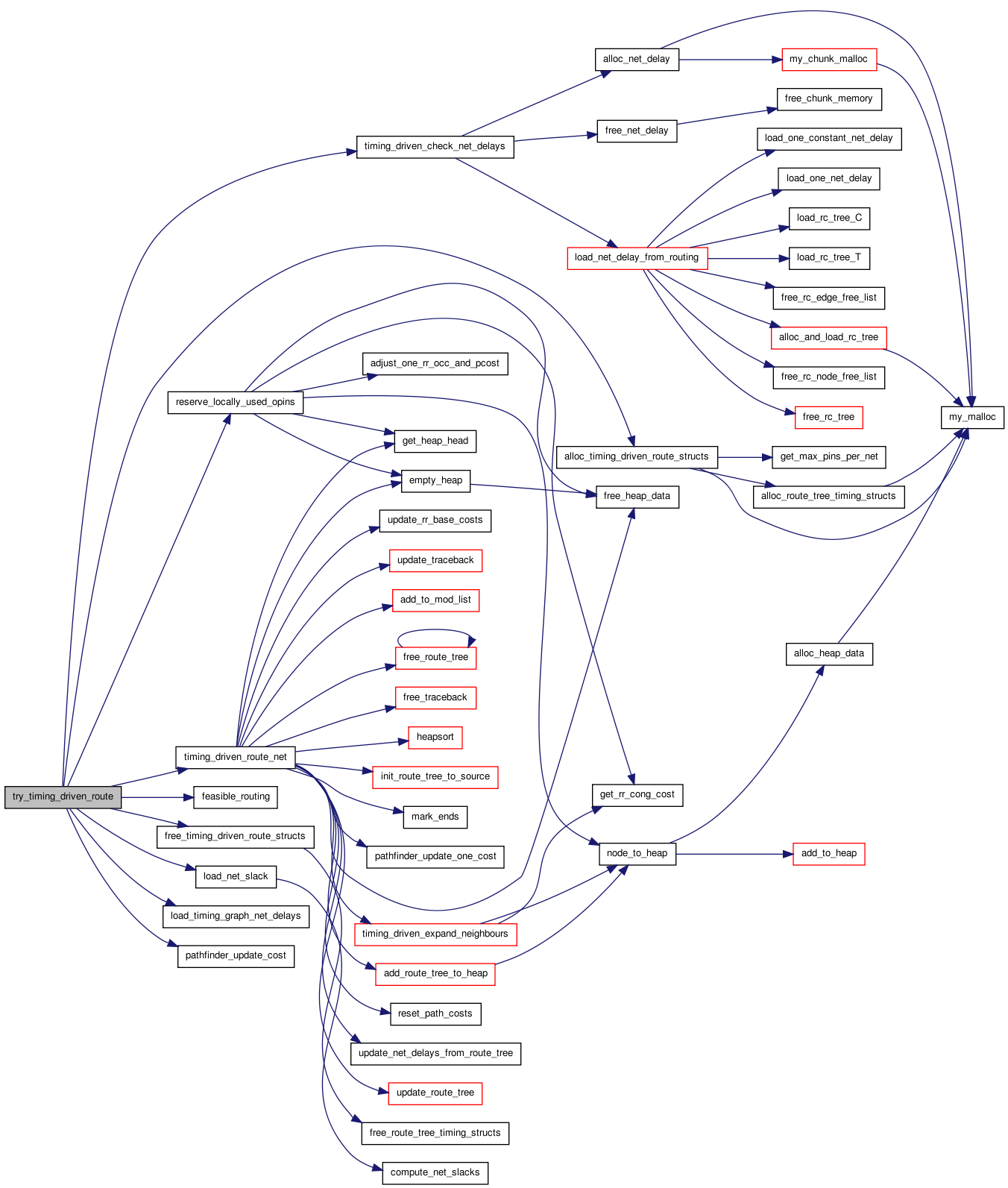

Here is the call graph for this function:

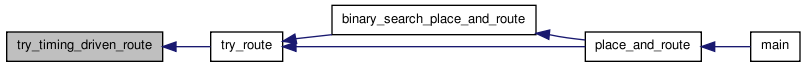

Here is the caller graph for this function:

| static void update_rr_base_costs | ( | int | inet, | |

| float | largest_criticality | |||

| ) | [static] |

Definition at line 735 of file route_timing.c.

00737 { 00738 00739 /* Changes the base costs of different types of rr_nodes according to the * 00740 * criticality, fanout, etc. of the current net being routed (inet). */ 00741 00742 float fanout, factor; 00743 int index; 00744 00745 fanout = net[inet].num_sinks; 00746 00747 /* Other reasonable values for factor include fanout and 1 */ 00748 factor = sqrt(fanout); 00749 00750 for(index = CHANX_COST_INDEX_START; index < num_rr_indexed_data; index++) 00751 { 00752 if(rr_indexed_data[index].T_quadratic > 0.) 00753 { /* pass transistor */ 00754 rr_indexed_data[index].base_cost = 00755 rr_indexed_data[index].saved_base_cost * factor; 00756 } 00757 else 00758 { 00759 rr_indexed_data[index].base_cost = 00760 rr_indexed_data[index].saved_base_cost; 00761 } 00762 } 00763 }

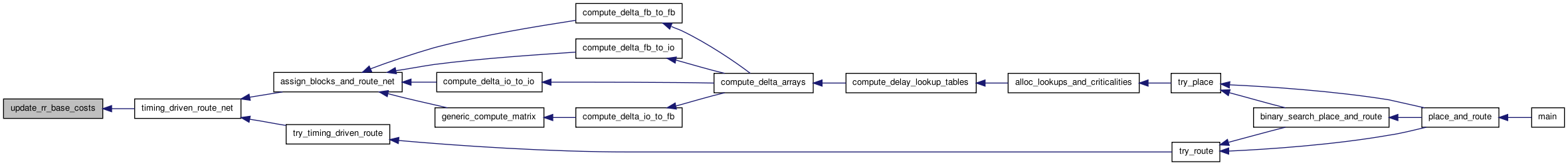

Here is the caller graph for this function:

1.6.1

1.6.1