vpr/SRC/pack/cluster.h File Reference

This graph shows which files directly or indirectly include this file:

This graph shows which files directly or indirectly include this file:Go to the source code of this file.

Functions | |

| void | do_clustering (const t_arch *arch, int num_models, boolean global_clocks, boolean *is_clock, boolean hill_climbing_flag, char *out_fname, boolean timing_driven, enum e_cluster_seed cluster_seed_type, float alpha, float beta, int recompute_timing_after, float block_delay, float intra_cluster_net_delay, float inter_cluster_net_delay, float aspect, boolean allow_unrelated_clustering, boolean allow_early_exit, boolean connection_driven, enum e_packer_algorithm packer_algorithm, boolean hack_no_legal_frac_lut, boolean hack_safe_latch) |

| int | get_cluster_of_block (int blkidx) |

Function Documentation

| void do_clustering | ( | const t_arch * | arch, |

| int | num_models, | ||

| boolean | global_clocks, | ||

| boolean * | is_clock, | ||

| boolean | hill_climbing_flag, | ||

| char * | out_fname, | ||

| boolean | timing_driven, | ||

| enum e_cluster_seed | cluster_seed_type, | ||

| float | alpha, | ||

| float | beta, | ||

| int | recompute_timing_after, | ||

| float | block_delay, | ||

| float | intra_cluster_net_delay, | ||

| float | inter_cluster_net_delay, | ||

| float | aspect, | ||

| boolean | allow_unrelated_clustering, | ||

| boolean | allow_early_exit, | ||

| boolean | connection_driven, | ||

| enum e_packer_algorithm | packer_algorithm, | ||

| boolean | hack_no_legal_frac_lut, | ||

| boolean | hack_safe_latch | ||

| ) |

Does the actual work of clustering multiple VPACK_LUT+FF logic blocks into clusters.

note: I allow timing_driven and connection_driven to be simultaneously true in this case, connection_driven is responsible for all clustering decisions but timing analysis is still enabled (useful for viewing the constant delay results) Algorithm employed: 1. Find type that can legally hold block and create cluster with pb info 2. Populate started cluster 3. Repeat 1 until no more blocks need to be clustered

Definition at line 223 of file cluster.c.

{

int istart, i, j, k, m, iblk;

int blocks_since_last_analysis;

int next_blk, prev_blk;

int num_blocks_hill_added;

int num_logical_blocks_clustered;

int max_cluster_size, cur_cluster_size, max_primitive_inputs, cur_primitive_inputs, max_pb_depth, cur_pb_depth;

int *hill_climbing_inputs_avail;

t_block *clb;

t_pb *cur_pb;

int num_clb;

boolean critical_path_minimized;

boolean early_exit;

enum e_block_pack_status block_pack_status;

int *num_used_instances_type, *num_instances_type; /* [0..num_types] Holds array for total number of each cluster_type available */

float **net_slack = NULL;

float num_paths_scaling, distance_scaling;

float crit;

hack_frac_lut_no_legality = hack_no_legal_frac_lut;

hack_force_safe_latch = hack_safe_latch;

/* TODO: This is memory inefficient, fix if causes problems */

clb = my_calloc(num_logical_blocks,sizeof(t_block));

num_clb = 0;

istart = NO_CLUSTER;

/* determine bound on cluster size and primitive input size */

max_cluster_size = 0;

max_primitive_inputs = 0;

max_pb_depth = 0;

indexofcrit = 0;

for(i = 0; i < num_types; i++) {

if(EMPTY_TYPE == &type_descriptors[i])

continue;

cur_cluster_size = get_max_cluster_size(type_descriptors[i].pb_type);

cur_primitive_inputs = get_max_primitive_inputs(type_descriptors[i].pb_type);

cur_pb_depth = get_max_pb_depth(type_descriptors[i].pb_type);

if(cur_cluster_size > max_cluster_size) {

max_cluster_size = cur_cluster_size;

}

if(cur_primitive_inputs > max_primitive_inputs) {

max_primitive_inputs = cur_primitive_inputs;

}

if(cur_pb_depth > max_pb_depth) {

max_pb_depth = cur_pb_depth;

}

}

if(hill_climbing_flag) {

hill_climbing_inputs_avail = (int *) my_calloc (max_cluster_size + 1, sizeof(int));

} else {

hill_climbing_inputs_avail = NULL; /* if used, die hard */

}

/* TODO: make better estimate for nx and ny */

nx = ny = 1;

check_clocks (is_clock);

#if 0

check_for_duplicate_inputs ();

#endif

alloc_and_init_clustering (global_clocks, alpha, beta, max_cluster_size, max_primitive_inputs, max_pb_depth, num_models);

blocks_since_last_analysis = 0;

critical_path_minimized = FALSE;

early_exit = FALSE;

num_logical_blocks_clustered = 0;

num_blocks_hill_added = 0;

num_used_instances_type = (int*)my_calloc (num_types, sizeof (int));

num_instances_type = (int*)my_calloc (num_types, sizeof (int));

assert(max_cluster_size < MAX_SHORT); /* Limit maximum number of elements for each cluster */

if (timing_driven){

net_slack = alloc_and_load_pre_packing_timing_graph(block_delay, inter_cluster_net_delay, arch->models);

load_net_slack(net_slack, 0);

criticality=(float*)my_calloc(num_logical_blocks,sizeof(float));

critindexarray=(int*)my_malloc(num_logical_blocks*sizeof(int));

for(i = 0; i < num_logical_blocks; i++) {

critindexarray[i] = i;

}

/* Calculate criticality based on slacks and tie breakers (# paths, distance from source) */

for(i = 0; i < num_tnodes; i++) {

iblk = tnode[i].block;

num_paths_scaling = SCALE_NUM_PATHS * (float)tnode[i].normalized_total_critical_paths;

distance_scaling = SCALE_DISTANCE_VAL * (float)tnode[i].normalized_T_arr;

crit = (1 - tnode[i].normalized_slack) + num_paths_scaling + distance_scaling;

if(criticality[iblk] < crit) {

criticality[iblk] = crit;

}

}

net_pin_backward_criticality = (float**)my_calloc(num_logical_nets, sizeof(float*));

net_pin_forward_criticality = (float**)my_calloc(num_logical_nets, sizeof(float*));

for(i = 0; i < num_logical_nets; i++) {

net_pin_backward_criticality[i] = (float *)my_calloc(vpack_net[i].num_sinks + 1, sizeof(float));

net_pin_forward_criticality[i] = (float *)my_calloc(vpack_net[i].num_sinks + 1, sizeof(float));

for(j = 0; j <= vpack_net[i].num_sinks; j++) {

net_pin_backward_criticality[i][j] = criticality[vpack_net[i].node_block[j]];

net_pin_forward_criticality[i][j] = criticality[vpack_net[i].node_block[0]];

}

}

heapsort(critindexarray, criticality, num_logical_blocks, 1);

/*print_critical_path("clustering_critical_path.echo");*/

if (cluster_seed_type == VPACK_TIMING){

istart=get_most_critical_unclustered_block();

}

else {/*max input seed*/

printf("get_seed_logical_block_with_most_ext_inputs\n");

istart = get_seed_logical_block_with_most_ext_inputs(max_primitive_inputs);

}

}

else /*cluster seed is max input (since there is no timing information)*/{

istart = get_seed_logical_block_with_most_ext_inputs(max_primitive_inputs);

}

while (istart != NO_CLUSTER) {

reset_legalizer_for_cluster(&clb[num_clb]);

/* start a new cluster and reset all stats */

start_new_cluster(packer_algorithm, arch, &clb[num_clb], num_clb, istart, aspect, num_used_instances_type, num_instances_type, num_models, max_cluster_size, max_primitive_inputs);

if(logical_block[istart].type != VPACK_INPAD && logical_block[istart].type != VPACK_OUTPAD)

{

printf("Complex Block %d: %s type %s\n", num_clb, clb[num_clb].name, clb[num_clb].type->name);

fflush(stdout);

}

update_cluster_stats (istart, num_clb, is_clock, global_clocks, alpha, beta, timing_driven, connection_driven);

num_clb++;

num_logical_blocks_clustered ++;

if (timing_driven && !early_exit) {

blocks_since_last_analysis++;

/*it doesn't make sense to do a timing analysis here since there*

*is only one logical_block clustered it would not change anything */

}

/* First try to pack primitive into cluster, select next block based on closest open sibling if available */

cur_pb = logical_block[istart].pb->parent_pb;

prev_blk = NO_CLUSTER;

while (cur_pb) {

if(packer_algorithm == PACK_BRUTE_FORCE) { /* if using brute force approach, look at all possible free blocks in cluster */

cur_pb = clb[num_clb - 1].pb;

}

next_blk = get_logical_block_for_cluster(packer_algorithm,

cur_pb,

is_clock,

allow_unrelated_clustering);

block_pack_status = alloc_and_load_pb(packer_algorithm, cur_pb->pb_graph_node, next_blk, cur_pb, num_models, max_cluster_size, max_primitive_inputs);

if(block_pack_status != BLK_PASSED) {

if(next_blk != NO_CLUSTER && prev_blk != next_blk) {

if(block_pack_status == BLK_FAILED_ROUTE) {

#ifdef DEBUG_FAILED_PACKING_CANDIDATES

printf("NO_ROUTE:%s#%d|%s ", logical_block[next_blk].name, next_blk, logical_block[next_blk].model->name);

#else

printf(".");

#endif

} else {

#ifdef DEBUG_FAILED_PACKING_CANDIDATES

printf("FAILED_CHECK:%s#%d|%s check %d", logical_block[next_blk].name, next_blk, logical_block[next_blk].model->name, block_pack_status);

#else

printf(".");

#endif

}

prev_blk = next_blk;

} else {

prev_blk = NO_CLUSTER;

cur_pb = cur_pb->parent_pb;

}

continue;

} else {

/* Continue packing by filling smallest cluster */

if(hack_frac_lut_no_legality) {

logical_block[next_blk].clb_index = num_clb - 1;

}

cur_pb = logical_block[next_blk].pb->parent_pb;

prev_blk = NO_CLUSTER;

#ifdef DEBUG_FAILED_PACKING_CANDIDATES

printf("PASSED:%s#%d ", logical_block[next_blk].name, next_blk);

#else

printf(".");

#endif

}

update_cluster_stats (next_blk, num_clb - 1, is_clock, global_clocks,

alpha, beta, timing_driven, connection_driven);

num_logical_blocks_clustered ++;

if (timing_driven && !early_exit) {

blocks_since_last_analysis++; /* historically, timing slacks were recomputed after X number of blocks were packed, but this doesn't significantly alter results so I (jluu) did not port the code */

}

}

if(logical_block[istart].type != VPACK_INPAD && logical_block[istart].type != VPACK_OUTPAD)

{

printf("\n");

}

save_cluster_solution();

if(hack_frac_lut_no_legality) {

k = 0;

for(i = 0; i < clb[num_clb-1].pb->pb_graph_node->num_input_ports; i++) {

for(j = 0; j < clb[num_clb-1].pb->pb_graph_node->num_input_pins[i]; j++) {

while(k < num_logical_nets) {

if(!clb[num_clb-1].pb->pb_stats.net_output_in_pb[k] && !vpack_net[k].is_global && clb[num_clb-1].pb->pb_stats.num_pins_of_net_in_pb[k] != 0)

{

rr_node[clb[num_clb-1].pb->pb_graph_node->input_pins[i][j].pin_count_in_cluster].net_num = k;

k++;

break;

}

k++;

}

}

}

/* check if nets are overused */

while(k < num_logical_nets) {

if(!clb[num_clb-1].pb->pb_stats.net_output_in_pb[k] && !vpack_net[k].is_global && clb[num_clb-1].pb->pb_stats.num_pins_of_net_in_pb[k] != 0)

{

printf(ERRTAG "Too many input nets used in cluster %s\n", clb[num_clb-1].name);

assert(0);

}

k++;

}

k = 0;

for(i = 0; i < clb[num_clb-1].pb->pb_graph_node->num_output_ports; i++) {

for(j = 0; j < clb[num_clb-1].pb->pb_graph_node->num_output_pins[i]; j++) {

while(k < num_logical_nets) {

for(m = 0; m <= vpack_net[k].num_sinks; m++) {

if(logical_block[vpack_net[k].node_block[m]].clb_index != num_clb - 1) {

break;

}

}

if(clb[num_clb-1].pb->pb_stats.net_output_in_pb[k] && (clb[num_clb-1].pb->pb_stats.num_pins_of_net_in_pb[k] < vpack_net[k].num_sinks + 1))

{

rr_node[clb[num_clb-1].pb->pb_graph_node->output_pins[i][j].pin_count_in_cluster].net_num = k;

k++;

break;

}

k++;

}

}

}

while(k < num_logical_nets) {

if(clb[num_clb-1].pb->pb_stats.net_output_in_pb[k] && (clb[num_clb-1].pb->pb_stats.num_pins_of_net_in_pb[k] < vpack_net[k].num_sinks + 1))

{

printf(ERRTAG "Too many output nets used in cluster %s\n", clb[num_clb-1].name);

assert(0);

}

k++;

}

k = 0;

for(i = 0; i < clb[num_clb-1].pb->pb_graph_node->num_clock_ports; i++) {

for(j = 0; j < clb[num_clb-1].pb->pb_graph_node->num_clock_pins[i]; j++) {

while(k < num_logical_nets) {

if(!clb[num_clb-1].pb->pb_stats.net_output_in_pb[k] && vpack_net[k].is_global && clb[num_clb-1].pb->pb_stats.num_pins_of_net_in_pb[k] != 0)

{

rr_node[clb[num_clb-1].pb->pb_graph_node->clock_pins[i][j].pin_count_in_cluster].net_num = k;

k++;

break;

}

k++;

}

}

}

while(k < num_logical_nets) {

if(!clb[num_clb-1].pb->pb_stats.net_output_in_pb[k] && vpack_net[k].is_global && clb[num_clb-1].pb->pb_stats.num_pins_of_net_in_pb[k] != 0)

{

printf(ERRTAG "Too many clock nets used in cluster %s\n", clb[num_clb-1].name);

assert(0);

}

k++;

}

}

if (timing_driven){

if (num_blocks_hill_added > 0 && !early_exit) {

blocks_since_last_analysis += num_blocks_hill_added;

}

if (cluster_seed_type == VPACK_TIMING){

istart=get_most_critical_unclustered_block();

}

else { /*max input seed*/

istart = get_seed_logical_block_with_most_ext_inputs(max_primitive_inputs);

}

}

else /*cluster seed is max input (since there is no timing information)*/

istart=get_seed_logical_block_with_most_ext_inputs(max_primitive_inputs);

free_pb_stats_recursive (clb[num_clb-1].pb, num_models);

}

if (timing_driven){

free_timing_graph(net_slack);

}

free_cluster_legality_checker();

alloc_and_load_cluster_info (num_clb, clb);

check_clustering (num_clb, clb, is_clock);

output_clustering( clb, num_clb, global_clocks, is_clock, out_fname, FALSE);

#ifdef DUMP_BLIF_ECHO

if(!hack_frac_lut_no_legality) /* must have routing graph before dumping blif */

output_blif( clb, num_clb, global_clocks, is_clock, "post_packing_blif.echo", FALSE);

#endif

if(hill_climbing_flag)

{

free (hill_climbing_inputs_avail);

}

for(i = 0; i < num_clb; i++) {

free_cb(clb[i].pb, num_models);

free(clb[i].name);

free(clb[i].nets);

free(clb[i].pb);

}

free(clb);

free (num_used_instances_type);

free (num_instances_type);

free (unclustered_list_head);

free (memory_pool);

free (net_output_feeds_driving_block_input);

}

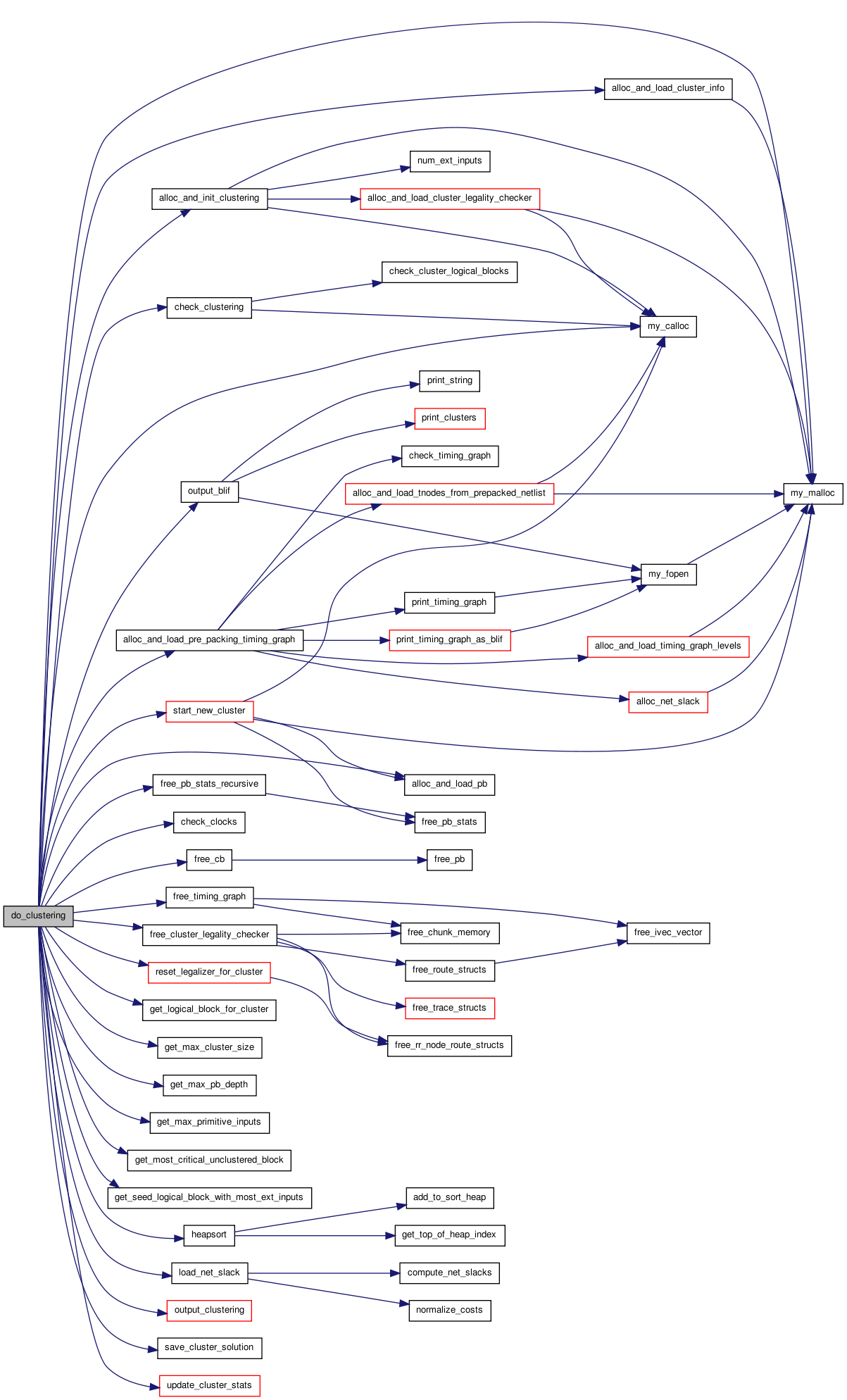

Here is the call graph for this function:

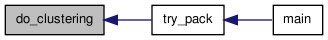

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:| int get_cluster_of_block | ( | int | blkidx | ) |